
==Webapps with python==

For a long time, the tradition in providing technical solutions was for experts to design and implement them for the benefit of society. Later, we started practising ‘awareness raising’. While this was a step in the right direction, describing the whys, whats and hows of a technical solution to societal stakeholders often came as an afterthought. A large body of evidence has shown that the best outcomes are achieved by involving the community from day one of a technical solution, that is, from the planning and design stage. Whether it is money management in a family, a policy in a government or an institution, or any other type of technical solution, the best stakeholder support is obtained when people co-own the product. The journey to co-ownership starts with co-discovery (of knowledge), co-design and implementing together.

This applies to any type of technical solution. However, it becomes a non-negotiable requirement for success in climate and nature-based solutions, because both the problems and their solutions are, by their very nature, distributed. Delivering electricity to a customer from a thermal power plant also involves dealing with ‘users’; we call this customer management. But with household-level, grid-connected solar electricity generation, that customer must become a business partner! That is the transformation we are witnessing in many sectors that address problems with climate and nature-based solutions, and this is how people are empowered to take part in design. The pandemic is a portal to make the wrongs right and to build back better and greener.


One of the surefire ways of creating co-ownership is to encourage co-discovery and co-design. For this, we face the challenge of bringing modern technological knowledge to stakeholders, including communities, in an understandable way while still allowing them to interact and contribute meaningfully. Interactive web applications are one of the modern tools that contribute to this mission. They allow water managers and scientists to bring complex data analysis, big-data technologies and dynamic water models closer to non-specialist stakeholders in an appealing and easy-to-use fashion.

==Demonstrations==

Here are some prototype web applications that were created for the water management, agriculture and asset management sectors. They are written in Python, using libraries like [https://plotly.com/dash/ Plotly Dash] and [http://www.web2py.com/ web2py] for the frontend. I host these apps in [https://auth0.com/blog/hosting-applications-using-digitalocean-and-dokku/ docker containers] managed by [http://dokku.viewdocs.io/dokku/ dokku], [https://en.wikipedia.org/wiki/Platform_as_a_service a PaaS (Platform as a Service)].

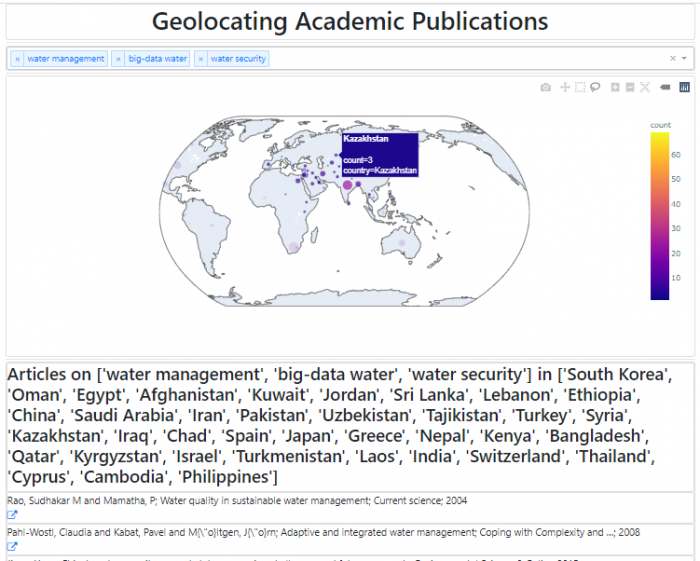

===[https://gs.srv.pathirana.net/ Geohacking publications with Python Natural Language Processing and Friends]===

<div style="overflow: hidden">[[file:nlpgeohacking_publications.png|thumb|center|700px|Google Scholar results on the map [https://gs.srv.pathirana.net/ LINK]]]</div>

====What is this and how it works====

This app uses Natural Language Processing to 'geoparse' Google Scholar search results. Select one or more keywords and see which places the publications refer to. Click on a country's bubble to see its publications listed below the map.

====Background====

Python provides all the tools needed for Natural Language Processing, including:

* Web scraping, e.g. [https://pypi.org/project/beautifulsoup4/ BeautifulSoup]

* Parsing and identifying entities, e.g. [https://www.nltk.org/ NLTK]

* Flagging geographical locations mentioned in the text and geolocating them (geoparsing), e.g. [https://github.com/openeventdata/mordecai Mordecai], [https://pypi.org/project/geograpy3/ geograpy3] and (simple) [https://pypi.org/project/geotext/ geotext].

====What it does====

* Downloads Google Scholar search hits for each keyword (in this demo, I have limited each keyword to the top 500 hits, to keep things simple).

* Stores them in a NoSQL database (MongoDB).

* Runs a geoparser (geotext in this case) to locate mentions of countries in the title or the abstract.

* Feeds the data to this app, so that the user can explore them interactively.
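The steps above can be sketched in plain Python. This is a minimal illustration of dictionary-based (gazetteer) country tagging, the kind of lookup geotext performs. It is not the app's code or geotext's API. The tiny `GAZETTEER`, the `countries_in` and `tag_records` helpers and the sample records are all hypothetical, and plain dicts stand in for MongoDB documents.

```python
import re
from collections import Counter

# Hypothetical mini-gazetteer: lower-case surface form -> canonical country.
# Real geoparsers such as geotext ship far larger name lists.
GAZETTEER = {
    "netherlands": "Netherlands",
    "sri lanka": "Sri Lanka",
    "kenya": "Kenya",
    "viet nam": "Vietnam",
    "vietnam": "Vietnam",
}


def countries_in(text: str) -> set:
    """Return the canonical countries mentioned anywhere in text."""
    lowered = text.lower()
    found = set()
    for name, country in GAZETTEER.items():
        # Word boundaries stop a name matching inside a longer token
        if re.search(r"\b" + re.escape(name) + r"\b", lowered):
            found.add(country)
    return found


def tag_records(records: list) -> Counter:
    """Tag each record with the countries in its title/abstract and count them."""
    counts = Counter()
    for rec in records:
        text = rec.get("title", "") + " " + rec.get("abstract", "")
        countries = countries_in(text)
        rec["countries"] = sorted(countries)  # stored on the record, like a document field
        counts.update(countries)  # one count per record, not per mention
    return counts


records = [
    {"title": "Flood risk in Sri Lanka", "abstract": "A case study from Colombo."},
    {"title": "Urban drainage", "abstract": "Compares Kenya and the Netherlands."},
    {"title": "Delta management", "abstract": "Lessons from Viet Nam and the Netherlands."},
]
counts = tag_records(records)
print(counts["Netherlands"], records[2]["countries"])  # 2 ['Netherlands', 'Vietnam']
```

The per-country counts correspond to what the map bubbles display. A record that mentions two countries is counted once for each of them.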

====How to use====

* (After closing these instructions) Select a keyword. The locations of the publications will be shown on the map, and a list of all the publications will appear below it.

* Click on the bubbles on the map to filter by country. The list will then be updated to cover only that country.

* You can select more than one keyword (simply select from the dropdown list).

* You can also select several countries, either with SHIFT+Click on the map or with the select tools (top-right).

* Click on the link below each record to see it on Google Scholar.

====What is missing====

* Many publications concerning the United States of America do not mention the country name (e.g. A statewide assessment of mercury dynamics in North Carolina water bodies and fish). NLP tools are usually smart enough to detect these (North Carolina is in the USA, so tag it as 'USA'), but the current (demo) implementation misses some obscure names.

* Tagging 'the United Kingdom vs. England' is complicated, and this issue still has to be fixed (that's why no articles are tagged for England).
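A common remedy for both gaps is to map sub-national and constituent-country names to their sovereign country before counting. The sketch below is minimal and assumes a small hand-made lookup table. `PARENT_COUNTRY` and `resolve_countries` are hypothetical, and a real system would draw on a full gazetteer such as GeoNames.

```python
import re

# Hypothetical lookup: sub-national or constituent-country name -> sovereign country
PARENT_COUNTRY = {
    "north carolina": "United States",
    "texas": "United States",
    "england": "United Kingdom",
    "scotland": "United Kingdom",
    "wales": "United Kingdom",
}


def resolve_countries(text: str) -> set:
    """Return the sovereign countries implied by known place names in text."""
    lowered = text.lower()
    return {
        country
        for name, country in PARENT_COUNTRY.items()
        if re.search(r"\b" + re.escape(name) + r"\b", lowered)
    }


title = ("A statewide assessment of mercury dynamics in "
         "North Carolina water bodies and fish")
print(resolve_countries(title))  # {'United States'}
print(resolve_countries("Flood defences in New England"))  # {'United Kingdom'}: a false positive
```

Rolling England up into the United Kingdom is only one possible design choice. The second call shows why naive matching is hard: 'New England' is wrongly tagged as the United Kingdom. Handling such cases needs longest-match rules or context from the surrounding text.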

====Next step?====

This demo provides a framework for Natural Language Processing of online material to make sense of information (e.g. geoparsing).

It combines several big-data constructs (unstructured data, NoSQL (JSON) data lakes, NLP tricks).

While a web app is not the right place to scale these up to the big-data level, the framework presented here can easily be implemented for large-scale processing on a decent cluster computer system.

With large-scale applications, some of the possibilities are:

* Identifying temporal trends in publications.

* Locating 'hotspots' as well as locations with few (or no) studies (geographical gaps).

===[https://waterdetect.srv.pathirana.net/ Seasonal levels in waterbodies using Sentinel-2 data]===

An end-to-end automated system for calculating seasonal water availability in practically any waterbody.

<div style="overflow: hidden">[[File:water-detect-webapp.png|center|thumb|center|500px|Seasonal water levels in waterbodies from Sentinel-2 data. [https://waterdetect.srv.pathirana.net/ LINK]]]</div>

====Background====

Sentinel-2 is an Earth observation mission of the Copernicus programme that systematically acquires multispectral optical imagery at high spatial resolution (10 m to 60 m) over land and coastal waters.

Sentinel Hub (a partner of the Euro Data Cube consortium), operated by a private IT company, provides cloud services for Sentinel-2 data. This provides an excellent way to access (petabytes of) data using a Python-based API. eo-learn is a collection of open-source Python packages that have been developed to access and process spatio-temporal satellite image sequences.

This web application shows how these technologies can be integrated to provide cloud-based data services in a form that non-experts can use.

====What it does====

It uses [https://sentinel.esa.int/web/sentinel/missions/sentinel-2 Sentinel-2] images (specifically the RGB and near-infrared bands, i.e. B02, B03, B04 and B08) to identify water. It goes through a series of images of the location of interest, taken at different times, and calculates the fraction of the area covered by water. This provides an easy way to estimate the amount of water in reservoirs, lakes and other water bodies. (Note, however, that what this application calculates is the fraction (area covered with water)/(a nominal area). To convert it to water volumes, we need the land contours of the reservoir.)
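The per-image calculation can be sketched with NumPy. This minimal sketch assumes the McFeeters Normalised Difference Water Index, NDWI = (B03 - B08)/(B03 + B08), computed from the green and near-infrared bands. The page does not say which index the app uses. The fixed threshold of 0 is a common default, not the Otsu threshold the app applies. The small arrays stand in for reflectance rasters clipped to the user's polygon, and the function names are hypothetical.

```python
import numpy as np


def ndwi(green: np.ndarray, nir: np.ndarray) -> np.ndarray:
    """McFeeters NDWI = (green - nir) / (green + nir); open water tends to be > 0."""
    green = green.astype(float)
    nir = nir.astype(float)
    denom = green + nir
    # Leave 0 where both bands are 0 (e.g. no-data pixels) instead of dividing by zero
    return np.divide(green - nir, denom, out=np.zeros_like(denom), where=denom != 0)


def water_fraction(green: np.ndarray, nir: np.ndarray, threshold: float = 0.0) -> float:
    """Fraction of the pixels inside the polygon that are classified as water."""
    return float((ndwi(green, nir) > threshold).mean())


# Toy 2x2 scene: top row is water (green > NIR), bottom row is land
b03 = np.array([[0.08, 0.07], [0.05, 0.04]])
b08 = np.array([[0.02, 0.03], [0.30, 0.25]])
print(water_fraction(b03, b08))  # 0.5
```

Repeating this for every acquisition date gives a time series of water-area fractions like the one the app plots. As noted above, turning fractions into volumes still needs the reservoir's land contours.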

====How it works====

The user marks a water body by drawing a polygon on the map, gives the location a name and submits it for processing by pressing the 'Request' button.

An automated processing engine waits in the background (but see the limitations below for a caveat!). Once the user submits a request, the engine queues it with other requests (made by multiple users) and processes them. This is a numerically expensive task for the server, and it consumes a lot of network bandwidth.

The user visits the site later (and presses the 'Refresh' button) to check whether the submitted job has finished. If it has, it will appear in the table below.

The user can select a result set by clicking on a tick-box on the left. The time series of the water area fraction then appears in the bottom graph.

Click on the graph points to see the satellite images overlaid on the map (use Gain and Opacity to fine-tune how they look).

====Current limitations====

The web-application system can do this whole process automatically and scales up really well (for example, responding to many user requests at the same time). However, it costs processing power, network bandwidth and Sentinel Hub processing units (which have to be paid for). Currently my server (which runs more than 15 web applications) has only 8 GB of RAM, while the backend needs about 32 GB to work well. Network bandwidth is not a problem, but Sentinel Hub processing units are. At present I am using their 'trial' account for this demonstration, and a paid account is needed for the system to scale up.

So at the moment, I am running this system in a limited mode.

User-submitted jobs do not go directly to the processing engine. Instead, an admin (currently me) has to 'approve' them (I can do this by clicking on the same table after authenticating). They are then processed automatically by the backend system. The reason: my account at Sentinel Hub has very limited resources.
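The approval step works like a queue in which only approved jobs become visible to the background worker. This is a minimal in-memory sketch. The `Job` and `JobQueue` names are hypothetical. A real deployment would persist jobs, for example in a database, so that the processing engine (a separate systemd service in this app) can read them.

```python
from collections import deque
from dataclasses import dataclass, field
from itertools import count
from typing import Optional

_next_id = count(1)  # simple job-id generator


@dataclass
class Job:
    name: str
    polygon: list  # (lon, lat) vertices drawn by the user
    approved: bool = False
    status: str = "pending"
    id: int = field(default_factory=lambda: next(_next_id))


class JobQueue:
    """FIFO queue in which only admin-approved jobs reach the processing engine."""

    def __init__(self) -> None:
        self.jobs = {}        # job id -> Job
        self.ready = deque()  # ids of approved jobs, oldest first

    def submit(self, name: str, polygon: list) -> int:
        job = Job(name, polygon)
        self.jobs[job.id] = job
        return job.id

    def approve(self, job_id: int) -> None:
        job = self.jobs[job_id]
        if not job.approved:  # approving twice must not queue the job twice
            job.approved = True
            job.status = "queued"
            self.ready.append(job_id)

    def next_job(self) -> Optional[Job]:
        """Called by the background worker; returns None when nothing is approved."""
        if not self.ready:
            return None
        job = self.jobs[self.ready.popleft()]
        job.status = "processing"
        return job


q = JobQueue()
jid = q.submit("Lake A", [(80.10, 7.20), (80.20, 7.20), (80.20, 7.30)])
print(q.next_job())       # None: waiting for approval
q.approve(jid)
print(q.next_job().name)  # Lake A
```

Approved jobs are served in approval order, not submission order. This matches the idea that the admin decides what consumes the limited processing units.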

While the system processes all satellite images available for a period, only the first 10 are saved as images. (Try clicking on points on the time-series graph: only the first 10 will show the corresponding image on the map.)

Currently I am using a simple water detection method (Otsu's threshold method).
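Otsu's method chooses the threshold that best splits the histogram of an index image into two classes (here water and land) by maximising the between-class variance. This is a minimal NumPy sketch, and `otsu_threshold` is a hypothetical helper name. scikit-image's `skimage.filters.threshold_otsu` offers a tested implementation. Applying the threshold to NDWI values, as in the usage lines, is an assumption, because the page does not say which band combination is thresholded.

```python
import numpy as np


def otsu_threshold(values: np.ndarray, bins: int = 256) -> float:
    """Threshold maximising the between-class variance of values (Otsu's method)."""
    values = values[np.isfinite(values)].ravel()  # drop NaN/no-data pixels
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2
    w0 = np.cumsum(hist).astype(float)          # pixels at or below each split
    w1 = w0[-1] - w0                            # pixels above each split
    s0 = np.cumsum(hist * centers)              # running sum of values below
    mean0 = np.divide(s0, w0, out=np.zeros_like(s0), where=w0 > 0)
    mean1 = np.divide(s0[-1] - s0, w1, out=np.zeros_like(s0), where=w1 > 0)
    between = w0 * w1 * (mean0 - mean1) ** 2    # proportional to between-class variance
    return float(edges[1:][np.argmax(between)])  # upper edge of the lower class


# Two well-separated clusters of index values: land (negative) and water (positive)
ndwi_values = np.concatenate([np.linspace(-0.6, -0.2, 50), np.linspace(0.2, 0.6, 50)])
t = otsu_threshold(ndwi_values)
water_mask = ndwi_values > t
print(-0.2 < t < 0.2, int(water_mask.sum()))  # True 50
```

Otsu's method adapts the threshold to each image, so no fixed cut-off is needed. It works best when the water and land values form two distinct peaks in the histogram.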

Due to the limited resources of the server, the system is not very responsive at the moment. The client application needs optimising too.

====What you can do (currently)==== | |||

Feel free to experiment with the job submission system. | |||

Examine already processed result sets. | |||

====Technologies used====

The frontend (what you see) is implemented with Flask and Plotly Dash. Maps are provided by OpenStreetMap with the Leaflet interactive map library, and Plotly is used to draw the graphs. The system runs on a Linux Virtual Private Server with two vCores and 8 GB of RAM. The processing engine is implemented as a systemd service. This is the system that talks to Sentinel Hub, processes satellite data, does the calculations and converts the results into something the frontend can understand.

The administration system is secured with two-factor authentication based on a time-based one-time password (TOTP) system.
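Time-based one-time passwords are standardised in RFC 6238, which builds on the HOTP algorithm of RFC 4226. The core check can therefore be written with Python's standard library alone. This is a minimal sketch, not the app's code, and the function names are hypothetical. In practice a maintained library such as pyotp handles details like base32-encoded secrets and provisioning URIs. The example uses the SHA-1 test secret from RFC 6238.

```python
import hashlib
import hmac
import struct
import time
from typing import Optional


def hotp(secret: bytes, counter: int, digits: int = 6) -> str:
    """HMAC-based one-time password (RFC 4226) using HMAC-SHA1."""
    mac = hmac.new(secret, struct.pack(">Q", counter), hashlib.sha1).digest()
    offset = mac[-1] & 0x0F  # dynamic truncation: low 4 bits pick a 4-byte window
    code = struct.unpack(">I", mac[offset:offset + 4])[0] & 0x7FFFFFFF
    return str(code % 10 ** digits).zfill(digits)


def totp(secret: bytes, at: Optional[float] = None, step: int = 30, digits: int = 6) -> str:
    """Time-based OTP (RFC 6238): HOTP over the number of elapsed time steps."""
    now = time.time() if at is None else at
    return hotp(secret, int(now // step), digits)


def verify(secret: bytes, code: str, at: Optional[float] = None,
           step: int = 30, window: int = 1) -> bool:
    """Accept codes from neighbouring time steps to tolerate small clock drift."""
    now = time.time() if at is None else at
    return any(
        hmac.compare_digest(totp(secret, now + k * step, step, len(code)), code)
        for k in range(-window, window + 1)
        if now + k * step >= 0  # no negative counters near the epoch
    )


secret = b"12345678901234567890"  # RFC 6238 SHA-1 test secret (ASCII)
print(totp(secret, at=59, digits=8))  # 94287082 (RFC 6238 test vector)
```

`hmac.compare_digest` compares codes in constant time, so response timing does not leak how many digits of a guessed code were correct.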

====Next step?====

These types of end-to-end automated data processing systems provide great opportunities to apply machine learning techniques, such as convolutional neural networks, to generate higher-level knowledge from what we calculate. For example, I have used TensorFlow to demonstrate how to detect cracks in concrete by automatic image identification.

The same approach can be used for land use classification at a much better accuracy level and with higher scalability (see this, this and this). But that's for later.


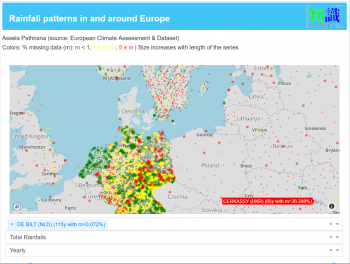

===[http://er.srv.pathirana.net/ Precipitation records of Europe]===

<div style="overflow: hidden">[[File:raingauge_stations_europe.png|center|thumb|center|350px|Interactive map, analysis and plotting tool for precipitation. [http://er.srv.pathirana.net/ LINK]]]</div>

The [https://www.ecad.eu/ European Climate Assessment & Dataset project], managed by the Royal Netherlands Meteorological Institute (KNMI), collects meteorological data from thousands of observation stations in (at the time of writing) 63 countries. The data include pressure, humidity, wind speed, cloud cover and precipitation (see [https://www.ecad.eu/dailydata/datadictionaryelement.php here] for the complete description). As of September 2019, this database includes observations from 57312 stations.

We extracted the precipitation data from this dataset and provide it through a web application where the user can explore the data, do some simple trend analyses and download downsampled (annual and monthly) data.
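The downsampling and trend steps are straightforward to express. This is a minimal plain-Python sketch. It assumes the daily values have already been converted to millimetres, with missing days marked as `None`. It uses an ordinary least-squares slope as the 'simple trend'. The function names are hypothetical, and the app may use pandas or a different trend test.

```python
from collections import defaultdict
from datetime import date


def downsample(daily: list) -> tuple:
    """Aggregate (date, mm) pairs into monthly and annual precipitation totals.

    Days with a missing value (None) are skipped.
    """
    monthly = defaultdict(float)
    annual = defaultdict(float)
    for day, mm in daily:
        if mm is None:
            continue
        monthly[(day.year, day.month)] += mm
        annual[day.year] += mm
    return dict(monthly), dict(annual)


def linear_trend(years: list, totals: list) -> float:
    """Ordinary least-squares slope of the totals, in mm per year."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(totals) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, totals))
    sxx = sum((x - mean_x) ** 2 for x in years)
    return sxy / sxx


daily = [(date(2000, 1, 1), 2.0), (date(2000, 1, 2), 3.5),
         (date(2000, 2, 1), None), (date(2001, 1, 1), 4.0)]
monthly, annual = downsample(daily)
print(annual)                                                   # {2000: 5.5, 2001: 4.0}
print(linear_trend([2000, 2001, 2002], [500.0, 520.0, 540.0]))  # 20.0
```

A year with many missing days has an artificially low total. Incomplete months or years should therefore be flagged or excluded before fitting a trend.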

[http://er.srv.pathirana.net/ LINK]


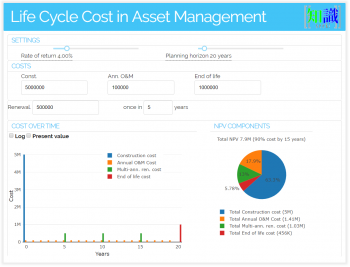

===[http://lcc.srv.pathirana.net/ Life-cycle Costing Tool]===

<div style="overflow: hidden">[[File:life_cycle_costing_tool_python.png|thumb|center|350px|Life-cycle cost calculator. [https://lcc.srv.pathirana.net/ LINK]]]</div>

One of the routine tasks of Infrastructure Asset Management is to calculate the 'total cost of ownership' of an asset, for example a building, a bridge or a barrage. This involves considering the cost of purchase or construction, annual operation and maintenance, periodic renewal and sometimes the ultimate cost of disposal. These costs are all brought to their [https://en.wikipedia.org/wiki/Net_present_value present value (PV)] and aggregated.


This app provides a convenient way to play with different cost components and interest rates (which are needed to calculate PV) and to understand how they affect the whole-life cost.
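The arithmetic behind such a calculator is discounting. A cost C incurred in year t has a present value of C/(1 + r)^t at discount (interest) rate r, and the whole-life cost is the sum over all cost components. This minimal sketch rests on simplifying assumptions: a constant rate, costs paid at the end of each year and renewal at a fixed interval. The parameter names are hypothetical, and the app's own cost breakdown may differ.

```python
def present_value(amount: float, year: int, rate: float) -> float:
    """Discount a cost incurred at the end of `year` back to year 0."""
    return amount / (1 + rate) ** year


def whole_life_cost(capital: float, annual_om: float, renewal_cost: float,
                    renewal_every: int, disposal: float, life: int, rate: float) -> float:
    """Present value of the total cost of ownership over `life` years.

    capital: purchase or construction cost, paid in year 0
    annual_om: operation and maintenance cost, paid in years 1..life
    renewal_cost: periodic renewal every `renewal_every` years (none in the final year)
    disposal: cost of disposal at the end of the asset's life
    """
    pv = capital
    pv += sum(present_value(annual_om, t, rate) for t in range(1, life + 1))
    pv += sum(present_value(renewal_cost, t, rate)
              for t in range(renewal_every, life, renewal_every))
    pv += present_value(disposal, life, rate)
    return pv


# With a zero rate, the PV is just the undiscounted sum: 1000 + 50*10 + 2*200 + 100
print(whole_life_cost(1000, 10, 200, 20, 100, life=50, rate=0.0))  # 2000.0
# At 5 % the same asset costs much less in PV terms, because future costs are discounted
print(round(whole_life_cost(1000, 10, 200, 20, 100, life=50, rate=0.05), 2))
```

The comparison between the two calls shows how strongly the interest rate influences the whole-life cost. Adjusting that rate interactively is what the app is designed for.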

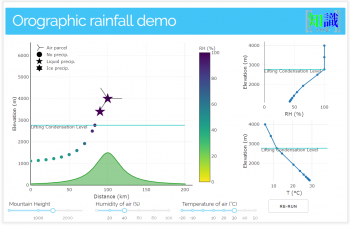

===[http://oro.srv.pathirana.net Orographic lift of wind fields - atmospheric quantities calculator]===

<div style="overflow: hidden">[[File:orographic_lifting_tool_python.png|thumb|center|350px|Atmospheric quantities with orographic uplift [http://oro.srv.pathirana.net/ LINK]]]</div>

This is an educational tool to demonstrate the interaction of wind fields with mountains. The user can change the mountain height and the humidity and temperature of the air, and observe how they contribute to the formation of precipitation (liquid or sometimes ice/snow).
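One textbook way to see when forced ascent over a mountain produces cloud is to compare the mountain height with the lifting condensation level (LCL). This minimal sketch is not the tool's own model. It uses the Magnus approximation for the dew point, with the commonly used coefficients 17.62 and 243.12 °C. It then applies Espy's rule of thumb of roughly 125 m of ascent per °C of dew-point depression. Both are approximations, and the function names are hypothetical.

```python
import math

# Commonly used Magnus coefficients over water (temperatures in deg C)
B, C = 17.62, 243.12


def dew_point(temp_c: float, rh_percent: float) -> float:
    """Dew point (deg C) from air temperature and relative humidity (Magnus)."""
    gamma = math.log(rh_percent / 100.0) + B * temp_c / (C + temp_c)
    return C * gamma / (B - gamma)


def lcl_height(temp_c: float, rh_percent: float) -> float:
    """Approximate lifting condensation level above the starting level (m).

    Espy's rule: about 125 m per degree of dew-point depression.
    """
    return 125.0 * (temp_c - dew_point(temp_c, rh_percent))


def condenses_over(mountain_m: float, temp_c: float, rh_percent: float) -> bool:
    """True if air forced over the mountain is lifted above its LCL."""
    return mountain_m > lcl_height(temp_c, rh_percent)


# Air at 25 deg C and 60 % RH saturates roughly 1 km above its starting level
print(round(lcl_height(25.0, 60.0)))
print(condenses_over(1500.0, 25.0, 60.0), condenses_over(500.0, 25.0, 60.0))  # True False
```

Raising the humidity lowers the LCL, so a lower mountain is enough to trigger condensation.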

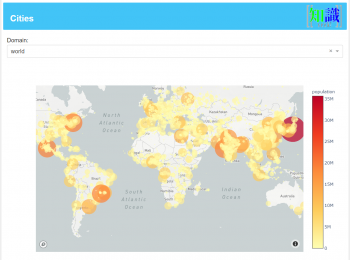

===[http://citypop.srv.pathirana.net/ Urban population of the world]===

<div style="overflow: hidden">[[File:citypop_tool_python.png|thumb|center|350px|Urban population of the world. Selected 13000 urban centres from around the world. [https://citypop.srv.pathirana.net/ LINK]]]</div>

Using the curated dataset provided by the [https://simplemaps.com/data/world-cities simplemaps] website, this plot shows the urban population of the world. Note: this dataset does not cover all populated places. It covers almost all major cities and towns, but the coverage of smaller places could be uneven.

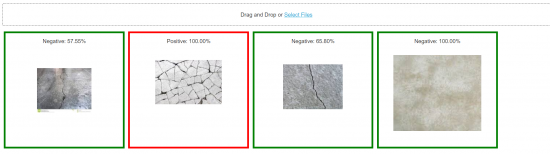

===[https://cp.srv.pathirana.net/ Concrete crack detection with CNN]===

In deep learning, a convolutional neural network (CNN) is a class of deep neural networks, most commonly applied to analyzing visual imagery.[https://en.wikipedia.org/wiki/Convolutional_neural_network]
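The core operation of a CNN layer is easy to show on its own. A small kernel slides over the image and produces a feature map that is large where the image matches the kernel's pattern. This minimal NumPy sketch uses a hand-made kernel that responds to a thin dark line, a crude 'crack'. The helper names are hypothetical. In a trained network, such as one built with TensorFlow's `tf.keras.layers.Conv2D`, the kernel weights are learned from labelled images instead. As in deep-learning libraries, the 'convolution' here is computed without flipping the kernel (strictly, a cross-correlation).

```python
import numpy as np


def conv2d(image: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """'Valid' 2-D convolution as used in CNN layers (no padding, stride 1)."""
    kh, kw = kernel.shape
    out_h = image.shape[0] - kh + 1
    out_w = image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            # Weighted sum of the image patch under the kernel
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out


def relu(x: np.ndarray) -> np.ndarray:
    """Rectified linear activation applied after the convolution."""
    return np.maximum(x, 0.0)


# 5x5 bright concrete patch with a dark vertical 'crack' in column 2
patch = np.ones((5, 5))
patch[:, 2] = 0.0

# Hand-made kernel: positive when a centre column is darker than its neighbours
crack_kernel = np.array([[1.0, -2.0, 1.0]] * 3)

feature_map = relu(conv2d(patch, crack_kernel))
print(feature_map)
```

The feature map is non-zero only in the column where the crack sits, and a uniform patch gives zero everywhere. A CNN stacks many such learned filters and then classifies the resulting feature maps.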


</gallery>

[[File:concrete_crack_results.png|thumb|center|550px|Crack detection in concrete with TensorFlow [https://cp.srv.pathirana.net/ LINK]]]

</div>

If you don't have such images, just search the web and download a few. Then [https://cp.srv.pathirana.net/ go to the app] and upload them. (You can also drag and drop them onto the app.)

Or, to start with, here is a sample I harvested from the web. Download the zip file, extract it and drag and drop all the image files onto the app! ([[file:webapps_with_python_concrete_images_sample_12.zip|zipfile]])

===[https://web2py.pathirana.net/urbangreenblue/default/index A simple front-end to an urban drainage/flood model]===


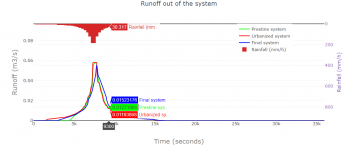

<div style="overflow: hidden">[[File:web2py swmm interface.png|thumb|center|350px|SWMM model results under different catchment conditions [https://web2py.pathirana.net/urbangreenblue/default/index LINK]]]</div>

===[https://rwh1.srv.pathirana.net/ Calculating Rainwater Harvesting Potential for Small Islands]===

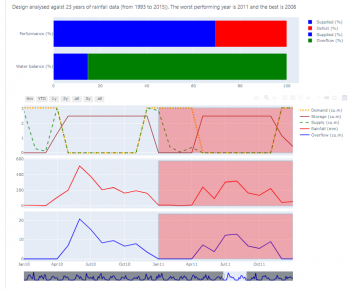

The user provides several input parameters: the roof area over which rainwater is collected, the planned tank size and the demand (how much water is planned to be used, and for how many months, e.g. during the dry period). Based on the user's location, the tool automatically selects historical rainfall data from the nearest meteorological station. It then simulates the harvesting and usage performance over several decades. Such multi-year calculations (for the Maldives, about 20-30 years in most cases) give a much better indication of how a rainwater harvesting system will work than assessing it against just one or two years of data. Like many other climates of the world, the climate of the Maldives shows a great deal of ‘inter-annual’ variability. Simulations over a longer period therefore give a more realistic picture of the system's performance against the backdrop of such variability.
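The multi-year simulation described above is essentially a daily water balance of the tank. This minimal sketch is not the tool's own code. It uses the widely applied yield-after-spillage (YAS) operating rule. The constant daily demand, the runoff coefficient and the function name are assumptions for illustration. The real tool lets demand apply only to selected months.

```python
def simulate_rwh(rain_mm: list, roof_area_m2: float, tank_m3: float,
                 demand_m3_per_day: float, runoff_coeff: float = 0.8) -> tuple:
    """Daily rainwater tank simulation with the yield-after-spillage rule.

    rain_mm: daily rainfall depths (mm), e.g. several decades of station data.
    Returns (reliability, total_supplied_m3), where reliability is the fraction
    of days on which the demand was met in full.
    """
    storage = 0.0
    days_met = 0
    supplied_total = 0.0
    for rain in rain_mm:
        inflow = rain / 1000.0 * roof_area_m2 * runoff_coeff  # mm -> m, times roof area
        supplied = min(demand_m3_per_day, storage)           # draw on previous storage
        # Add the inflow; anything above the free tank volume spills as overflow
        storage = min(storage + inflow - supplied, tank_m3 - supplied)
        supplied_total += supplied
        if supplied >= demand_m3_per_day:
            days_met += 1
    return days_met / len(rain_mm), supplied_total


# One 10 mm storm on a 100 m2 roof, followed by nine dry days
rain = [10.0] + [0.0] * 9
print(simulate_rwh(rain, 100.0, 2.0, 0.125, runoff_coeff=0.5))  # (0.4, 0.5)
```

Running the model over 20-30 years of station data, as the tool does, captures the inter-annual variability mentioned above. Reliability and the volume supplied are then natural quantities to compare across tank sizes.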

<div style="overflow: hidden">[[File:rwh calculator results.png|thumb|center|350px|Rainwater harvesting calculator results. [https://rwh1.srv.pathirana.net/ LINK]]] </div>

[https://rwh1.srv.pathirana.net/ LINK]

===[https://infil1.srv.pathirana.net/ Groundwater recharge calculator]===

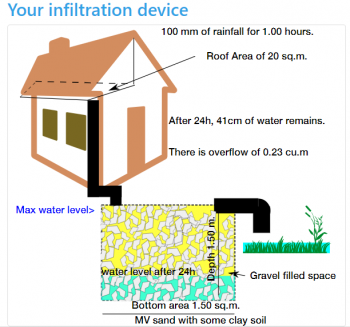

The tool allows the user to choose this by providing the hourly rainfall rate and the number of hours over which that intensity continues. After providing the size of the infiltration pit, whether it is filled with gravel or left empty, and the surrounding soil type, the user can calculate the performance of the system. The results are provided as a graphic as well as a graph of the water level in the structure and any overflow.
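The pit behaves like a small reservoir. Runoff fills it, water infiltrates into the surrounding soil, and anything beyond its storage capacity overflows. This is a minimal hourly bucket-model sketch, not the calculator's actual model, and the function name is hypothetical. It assumes runoff from a contributing area (`catchment_m2`, a hypothetical parameter). It also assumes infiltration only through the pit floor, at a constant rate set by the soil type. Porosity is 1.0 for an empty pit and roughly 0.3-0.4 for a gravel fill.

```python
def simulate_pit(rain_mm_per_h: float, rain_hours: int, catchment_m2: float,
                 pit_area_m2: float, pit_depth_m: float, porosity: float,
                 infil_m_per_h: float, total_hours: int) -> tuple:
    """Hourly water level (m) in an infiltration pit and total overflow (m3).

    porosity: 1.0 for an empty pit, lower (roughly 0.3-0.4) for gravel fill.
    infil_m_per_h: infiltration rate of the surrounding soil through the pit floor.
    """
    capacity = pit_area_m2 * pit_depth_m * porosity  # water the pit can hold, m3
    stored = 0.0
    overflow = 0.0
    levels = []
    for hour in range(total_hours):
        if hour < rain_hours:
            stored += rain_mm_per_h / 1000.0 * catchment_m2  # runoff into the pit
        stored -= min(stored, infil_m_per_h * pit_area_m2)  # loss to the soil
        if stored > capacity:  # the excess spills over the top
            overflow += stored - capacity
            stored = capacity
        levels.append(stored / (pit_area_m2 * porosity))  # volume -> water depth
    return levels, overflow


# 20 mm/h for 2 hours from 100 m2 into an empty 1 m x 1 m x 1 m pit;
# the soil is assumed to take up 0.5 m/h
levels, overflow = simulate_pit(20.0, 2, 100.0, 1.0, 1.0, 1.0, 0.5, total_hours=4)
print(levels, overflow)  # [1.0, 1.0, 0.5, 0.0] 2.0
```

Filling the pit with gravel reduces the volume it can store, so the same storm overflows more.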

<div style="overflow: hidden">[[File:infil calculator results.png|thumb|center|350px|Groundwater recharge calculator results. [https://infil1.srv.pathirana.net/ LINK]]] </div>

[https://infil1.srv.pathirana.net/ LINK]

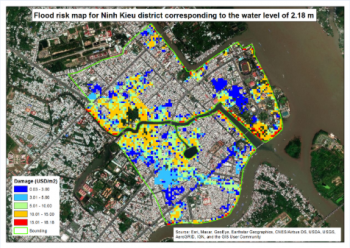

===[http://fg.srv.pathirana.net/ Flood Hazard and Risk Simulator]===

<div style="overflow: hidden">[[File:flood calculator results.png|thumb|center|350px|Flood Hazard and Risk Simulator Results. [http://fg.srv.pathirana.net/ LINK]]] </div>

[http://fg.srv.pathirana.net/ LINK]

Latest revision as of 05:24, 1 February 2023
